Few weeks ago, I was working on adapting certain pieces of an existing SSIS framework created with an earlier version of SSIS to make it work with SSIS 2012.

In this post, I want to share some of my findings and design notes around auditing-logging-re-cover ability of that ETL framework and how I got them to play along with SSIS 2012 and the project deployment model.

An old good practice: ETL execution logging

It is a general good practice to generate and capture an ‘adequate’ level of execution logging information in your ETL applications or systems. The idea is that ETL developers and admins can use that information to determine if and when a processes has started, if and when it fails, and when it completes. From there, one can start doing more interesting things such as creating reports to monitor the execution of processes, develop auditing capabilities – e.g. what instance of a process touched what rows –, and even use this information to create a custom processes/package restart and recovery capabilities.Almost every ETL framework I have seen and worked have functionality embedded, and it is typically implemented inside of the control flow of the packages using a pattern like this:

Log begging of process and generate ID>> process logic >> Log end of process

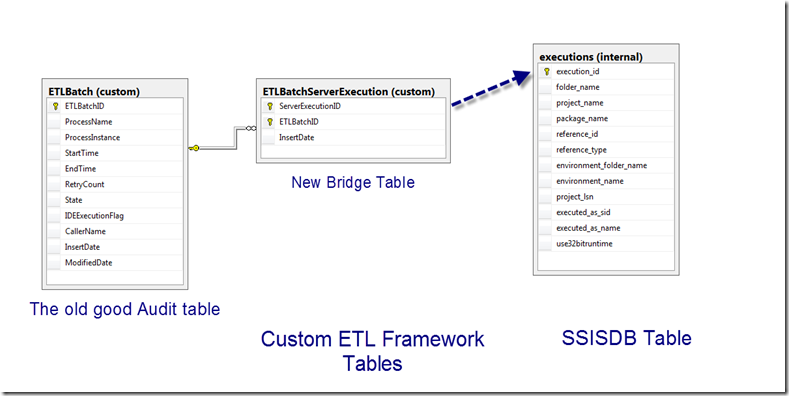

Typically, this is done at the master package level and in many cases something similar is done inside of each child package. In every case, an unique ID is generated as soon as the main (or master) package starts. This ID is the logical unit of ETL process execution. Under my design, no ID is generated until the previous one has been marked as completed – even if the process is executed multiple times due to failures.People use different names for this ID or unit of work: run_id, application_instance_id, ETL_control_id, etc. In this reincarnation of ETL framework I am working on these days, I call it ETLbatchID, and I use a table like the one in the picture below to store them and keep track of them

As you see, This table not only gives me the ID, but also provides other important information such as start time, end time and the number of time the process was re-started.

But wait, do we still need this technique in SSIS 2012? I heard logging is automatic…

In my case, the short answer is yes. See, SSIS 2012 brings a great deal of manageability and auditing improvements that automatically tracks and logs execution of packages. Each time we run a package in the SSIS server, an execution_id is generated and logged in SSISDB – the SSIS catalog. It even goes as far as associating that execution_id to all executable – such as tasks, children packages - that ran under that package.Though this is great, it has its caveats. One of the problems is that SSIS 2012 server issues a new execution_id each time you execute a package, so if the process fails and I restart the master package , I would get a second execution_id, hence having to track 2 different ID values without having an straightforward way to group them. If you are curious, just run queries against the executions view in SSIDB as you run packages: [catalog].[executions] view in SISDB database.The way most ETL frameworks work, there needs to be control over the unit of auditing of the ETL processes. For instance, all sub process and records affected by an execution of the ETL process can be identified using an unique ID, even if the process has to be run multiple times (e.g failures).

Bringing the 2 together

Since I wanted to keep the same level of control over the ETL auditing unit of my old framework, but still take advantage of the goodies SSIDB offers me, I ended up creating a bridge table that will associate the custom ETLBatchIDs with the SSIDB execution_ids and extending the framework’s logging process to populate it via execute sql tasks.

Notice that I did not add a FK constraint between the custom tables and the SSISDB (dotted line) as I wanted to minimize the impact this could have had over SSIDB cleanup processes.

Whit this setup, I now have the ability to keep the unit of work/auditing the user was used to – ETL Batch ID – but still be able to join to the built-in SSIDB execution tables and views, which open the door to a richer ETL auditing and reporting experience. For instance, I am able to see how many SSISDB executions_IDs were required before a given ETLBatchID was completed and access the execution details of each one of them, like in the case of ETLBatchID=1007 below

Notice that I still have a lot of testing to do, so if you decide to use this approach, use it at your own risk. On the other, I would love to hear your feedback if you know of a different way of doing this, or if you catch any flaws in my approach.

-rafa

This comment has been removed by the author.

ReplyDeleteHi Rafael,

ReplyDeleteWhat's your thoughts on this subject after almost a year?

I'm starting a new greenfield project in several weeks time and have a chance to introduce a new framework. With previous version my approach would be similar to yours (I think) which is "control table" with a process and a list of packages which then would use a single master ssis package to get all packages for a given process and execute each child package (for each loop) with given batchid and it seems your approach above is something I will have to do get process level info which is very valuable.

Any thoughts on managing deployment or project with SSIS 2012? Now that we have to deploy ALL or nothing it seems I would feel more comfortable by doing "diff" between source code and server files (like with SQL Deployment).

Another question is do we keep all SSIS package in one massive project or multiple ones? I think there is no way to logically group it in folder etc so my initial thought is to prefix package (extract (if used), staging, load etc).

Take care

Emil

Hi Emil,

ReplyDeleteThanks fro you comment and questions.

The approach depicted above is working great for me so far. Where I would defer is on using a single master package and a for each process t execute the children packages, mainly as I want to parallelize the execution of children packages, but I have met people that don't mind that as it provides them with a finer control and cleaner master package.

Regarding the all/nothing deployment model of the project, there is a lot of people complaining about that still. The best recommendation I have if to leverage your source control process to gate/label what needs to be deployed. Because of that I would attempt to also define smaller projects - perhaps you can decouple the extract packages by source system from the rest.

Cheers,

Rafa

Hi Rafa,

ReplyDeleteWhat's your view on Project Parameters vs Connection Manager? It seems connection manager behaves like it should, so I'm wondering maybe I should change my old habits?

I currently don't see many differences apart from that it would have to be deployment/managed in a slightly different way. I wonder if there are any significant pros/cons?

Take care

Emil

I made some tests and decided to use both.

DeleteReasons:

1) It is available on every package by default (don't need to add it)

2) It can use Project Parameter (I use required = true to explicitly specific new environment during deployment)

NOTE: If we change "Parameterize" dialog box (inside a package) from don't use or use parameter than it only affects connection manager item (solution explorer), that means that we can use any package to change it and it will not update package code (and other packages code) and will only update connection manager code file... hope that makes sense.

Take care

Emil

It seems my previous comment wasn't published.... let me start again... thanks for reply and I might try BI xPress (Pragmatic Works) just so I can quickly start without worrying with testing of new framework etc.

ReplyDeleteHi Emil, I'm in the process of setting up an auditing and logging framework for a new data warehouse project. I'm using SQL 2012 and am just discovering the SSISDB features. Regarding your post - I'm interested to know what your thoughts were about BI xPress as an auditing and logging framework?

DeleteAny input would be appreciated :-)

Cheers, Stuart

Hi Katie,

ReplyDeleteI just recently finished an SSIS 2012 project and also did some experimentation as where/how to parameterize connection manager. I settled with connection managers at the project level as I did not have that many and a great number of packages will use them. I did not explicitly parameterized them as connection managers are exposed and ready to be configured at execution time, so that saved me on having to declare additional parameters. I short, I bind env. variables directly to connection manager properties. Thanks for sharing your experience. I may do a post on this topic soon as I am too also interested on what other peoples experiences are.

Hi Rafa,

ReplyDeleteInteresting, I didn't know you could do that. There are very few differences so I presume it will be personal preferences.

I tried connection manager without project parameters but come across this aspects:

1) I cannot leave it blank? With parameter required it will be blank, so ensures that it is explicitly configured.

2) It seems it accepts one property at a time.. In most cases it is fine as only server name changes but recently I try to include Packet Size=32767 in oledb connection string (performance), and I don't see this property available in UI?

3) For some reason I don't see "folder" connection in connection manager.

One drawback I found is when I have multiple flat connection which makes it less readable but I presume I could go with one folder and use local variable to access folder, the only drawback would be when a folder is moved to completely different path which is when I would have to introduce new file connection and have to change package itself to use new file connection .

Take care

Emil