Have you ever had the need to create summary and detailed reports to understand the results of a SSIS upgrade exercise such as packages by type of errors and warnings; the number of packages that upgraded successfully/unsuccessfully?

Upgrading SSIS packages is in most cases a straightforward exercise using the SSIS upgrade wizard. However, the output file produced by the wizard with the upgrade results (warning, errors, upgrade status, etc.) is very verbose and formatted in a way that makes it very hard to consume when upgrading a large number of packages.

I recently experienced this pain when working in a project that required to upgrade more than 1,800 SSIS packages that produced an upgrade output file with more than 35,000 lines worth of messages! See snippet of the output file below.

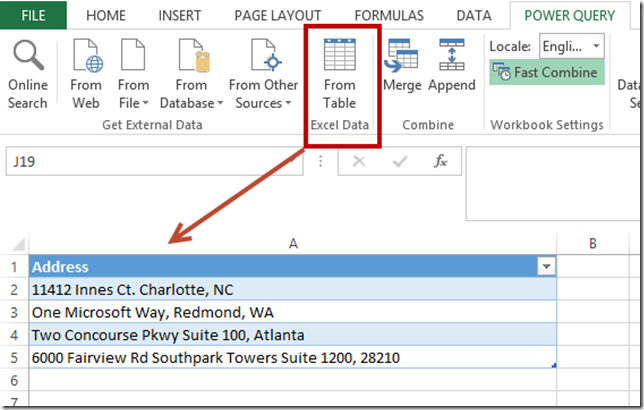

Since I typically use the output file to get a better sense of the effort involved to complete the upgrade of all packages, I quickly realized that for this project I would need to come up with a way to consume such a large output file. Fortunately, I was able to create an excel report like the one below using Power Query in a very simple way. As you can see below, it is very easy to get a sense of how difficult the upgrade is going to be. In the example below, I can quickly see there are only 14 packages that had errors ad 599 with warnings. Additionally, I can easily get a break down of the type of errors and the get the list of packages by message code/type.

If you are interested in creating a similar report, you can download a copy of a report template I created: SSISUpgradeResultsReport Template.xlsx

Note: Make sure you have Power Query for Excel installed and you edit pqSSISUpgradeReport Power Query query to point to the location where your SSIS upgrade wizard output file is stored ( See query below)

let

Source = Table.FromColumns({Lines.FromBinary(File.Contents("D:\SSIS\SSIS Upgrade report all packages 2013 10 09.txt"))}),

FilteredRowsBlanks = Table.SelectRows(Source, each ([Column1] <> "" and [Column1] <> "#(tab)" and [Column1] <> "#(tab)Messages" and [Column1] <> "The Upgrade is Complete")),

InsertedCustom = Table.AddColumn(FilteredRowsBlanks, "Custom", each if Text.Range([Column1],0,19)="- Upgrading package" then [Column1] else null),

FillDown = Table.FillDown( InsertedCustom,"Custom"),

FilteredRows1 = Table.SelectRows(FillDown, each not Text.Contains([Column1], "- Upgrading package")),

SplitColumnDelimiter = Table.SplitColumn(FilteredRows1,"Custom",Splitter.SplitTextByEachDelimiter({"("}, null, true),2),

ReplacedValue = Table.ReplaceValue(SplitColumnDelimiter,")","",Replacer.ReplaceText,{"Custom.2"}),

ReplacedValue1 = Table.ReplaceValue(ReplacedValue,"- Upgrading package","",Replacer.ReplaceText,{"Custom.1"}),

SplitColumnDelimiter1 = Table.SplitColumn(ReplacedValue1,"Custom.1",Splitter.SplitTextByEachDelimiter({"\"}, null, true),2),

RenamedColumns = Table.RenameColumns(SplitColumnDelimiter1,{{"Column1", "Mesage"}, {"Custom.1.1", "Folder"}, {"Custom.1.2", "PackageName"}, {"Custom.2", "UpgradeResult"}}),

ReorderedColumns = Table.ReorderColumns(RenamedColumns,{"Folder", "PackageName", "UpgradeResult", "Mesage"}),

SplitColumnDelimiter2 = Table.SplitColumn(ReorderedColumns,"Mesage",Splitter.SplitTextByEachDelimiter({":"}, null, false),2),

RenamedColumns1 = Table.RenameColumns(SplitColumnDelimiter2,{{"Mesage.1", "Message Code"}, {"Mesage.2", "Message"}}),

TrimmedMessageCode = Table.TransformColumns(RenamedColumns1,{{"Message Code", Text.Trim}}),

Lowercase = Table.TransformColumns(TrimmedMessageCode,{{"Message Code", Text.Trim}, {"PackageName", Text.Lower}})

in

Lowercase